This video is part of TechXchange: AI on the Edge. You can also check out more TechXchange Talks videos.

NXP Semiconductors provides a range of hardware and software tools and solutions that address edge computing, including support for machine learning (ML) and artificial intelligence (AI). The company's new eBook, Essentials of Edge Computing (PDF eBook), caught my attention because of Chapter 4, which discusses AI/ML support.

I was able to talk with Robert Oshana, Vice President of Software Engineering R&D for the Edge Processing business line at NXP, about the eBook and AI/ML development for edge applications. Robert has published numerous books and articles on software engineering and embedded systems. He's also an adjunct professor at Southern Methodist University and is a Senior Member of IEEE.

You can also read the transcript of the video (below).

Interview Transcript

Wong: Machine learning and artificial intelligence are showing up just about everywhere, including on the edge where things can get a little challenging. Rob, could you tell us a little bit about machine learning on the edge and why it's actually practical to do now?

Oshana: So first of all, in general, edge processing provides a number of benefits over direct cloud connectivity. One is latency. For example, by processing on the edge, you don't have to send information back and forth to the cloud. So latency is a big concern, and when you apply that concept to machine learning, it makes even more sense.

Machine learning inherently involves a lot of data. You would want to do as much of that inferencing as possible either on the end node or on the edge, as opposed to trying to send information back and forth to the cloud. So latency is clearly a benefit.

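Also, connectivity. There are many applications for machine learning in areas where you don't actually have connectivity to the cloud. I've been involved in a couple of them recently. One is medical: in certain physical areas of the world, medical services don't have reliable connectivity to the cloud, but you still want to do some machine learning there on the edge in order to make real-time intelligent decisions.

Another area where I have some experience is oil and gas, where it's the same concept. You're out in the middle of nowhere, doing some logging and looking at a bunch of data going back and forth, and you want that kind of smart ML (machine learning) done in real time.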

And if you don't have that connectivity, you know, obviously you're going to be limited. So that's one reason why it's gaining a lot of popularity: you need that low latency in real time. And then also, you know, when you do machine learning, let's say in the cloud, it's big-iron stuff. You're leveraging high-end compute, high-performance computing, multiple GPUs. And then there's the whole kind of, you know, go-green initiative, if you will.

On the edge, where you've got limited memory and also limited processing and power, you can do it more efficiently.

It's a great initiative, and again, you don't have to leverage all that heavy horsepower in the cloud. These are a couple of reasons why I think doing this on the edge is gaining in popularity.

Wong: Okay. Well, could you walk us through the development flow for this?

Oshana: It's pretty much the same for the edge as it is in the cloud.

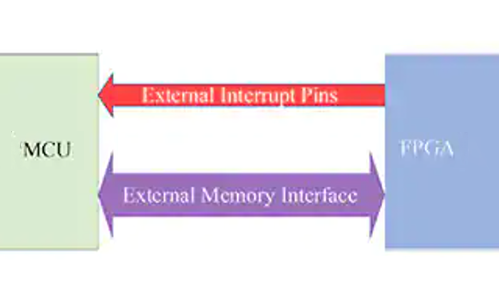

In many cases, it's this diagram (Fig. 1) I've got here at a high level. The development flow shows the main components of ML (machine learning) development and, just by looking at this very quickly, you can see it's pretty complicated.

There's a lot of stuff going on with the data which is shown in the green there, lots of data flow related to the data and also lots of operations with the black arrows, and, overall, it's a pretty complicated picture.

You can start doing this in the cloud, or you can actually do much of this on the edge. Our edge-based toolkit, eIQ, allows developers to bring their own model and begin doing a lot of the steps on the edge, like on a PC, and deploy directly to the edge without having to get the cloud involved.

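However, we know that many developers and data scientists want to start in the cloud. In fact, the next diagram I'm showing here is the workflow and the edge ML (machine learning) tools.

What it shows on the left is that embedded developers, or even data scientists or some ML experts, may want to start in the comfort of the cloud. Maybe they have a very large model to build, or they're just familiar and comfortable with the cloud.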

We give them the ability to do that. You can also do very similar things on the edge if you want to. We provide both options in the sense that if someone wants to start and build their model in the cloud, using some of the sophisticated tools in the cloud, we'll provide an easy way of deploying that model to the edge. And that's what's shown on the left, through the cloud, down to the edge or even to an end node. So we make it easy.

One of the things about these ML flows, as you can see, is that they're pretty complicated. We're trying to provide ease of use, kind of an out-of-box experience that makes it a lot easier, so you don't have to go through all kinds of detailed steps and, you know, not everybody has to be an ML expert.

When you deploy down to the bottom there, where you actually have an application and inferencing running on an edge processor, an edge device, or an end node, this is where we optimize the machine learning, the inferencing, to fit in smaller footprints like memory, to run at low power, and to use as few cycles as possible, using some of the sophisticated frameworks that are out there today.
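The interview does not say which techniques are used to shrink the inferencing footprint. One widely used approach is 8-bit quantization of model weights, and the sketch below illustrates only that general idea. The function names `quantize_int8` and `dequantize` and the sample weights are hypothetical and are not part of eIQ or any NXP tool.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> int8 values plus one scale.

    Illustrative only; real toolchains use more elaborate schemes.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest step and clamp to the symmetric int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 values."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.42, -1.27, 0.05, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

float32_bytes = 4 * len(weights)  # 4 bytes per 32-bit float
int8_bytes = 1 * len(weights)     # 1 byte per 8-bit integer
print(q, float32_bytes, int8_bytes)
```

Storing each weight in one byte instead of four cuts weight memory by roughly 4x, at the cost of a rounding error of at most half a quantization step per weight.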

Wong: Could you talk about some of the main AI frameworks you support, and why you would use one AI framework over another?

Oshana: Let me start by showing this slide (Fig. 2) that shows some of these frameworks. Some of them are pretty popular, like TensorFlow and even TensorFlow Lite, developed by Google. Our approach, by the way, is to leverage some of the best-in-class technology that's out there in the open community.

So we work very closely with Google, and with Facebook for Glow, which is an ML compiler. But to your point, some of these frameworks, like TensorFlow and TensorFlow Lite, provide a lot of very good high-performance libraries and also ease of use. They abstract out some of the complexity for the developer, so they don't have to know the details of some of the algorithms. There's a need for that kind of abstraction.

PyTorch, another framework that we support, also provides a level of ease of use with some very sophisticated algorithms for developing certain types of models. When you get down to the inferencing, even something like TinyML, which is optimized to run in very small footprints of memory and processing cycles, is becoming very, very popular.

So the reason we support several of them is that it depends on the type of application you're building, the level of sophistication you want in the algorithms, the type of deployment scenario you want from a footprint perspective, and ease of use.

We support several of these different frameworks. Again, our approach is to leverage the best-in-class from the open community, and then what we do is optimize them to run very efficiently on different types of ML processing elements.

Wong: Okay. Well, since you had the slide up, can we talk a little bit about the range of AI hardware and what level of sophistication you would find at each level?

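Oshana: Yes, good question. As you can see in this diagram, our approach is really to support ML on multiple different types of processing elements.

In other words, starting from the left, if you want to do ML on a normal Arm CPU, we provide the software stack to do that. And there are some benefits. It's not as efficient, but some people still decide to run part of their ML algorithms on the CPU.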

But look at the other ones.

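DSPs are obviously inherently signal-processing oriented, and even some of the root kernels of ML are in signal processing. So we provide some support for DSPs. You know, some of the early ML started with GPU processing. GPUs have some parallelism and other structures that are optimized for doing certain forms of machine learning.

So we support that. On the neural processing units, or what I kind of generically call an ASIC, something that's very optimized for neural-network processing such as a multilayer perceptron, with an NPU you can get very specific as far as the types of algorithms supported, the memory usage, and the parallelism.

So we support some of our own internal neural-network processing units in order to accelerate performance on machine learning.

And then, just for completeness, you can also run on a video processing unit, which also has some structures optimized for machine learning.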

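And I would be remiss if I didn't mention, now that we're talking about hardware, even some newer technologies like analog ML, where you're kind of eliminating the von Neumann bottleneck by doing memory and computation on the same device.

Those are also showing a lot of promise. As long as the hardware supports it, using technologies like the Glow compiler (for machine learning), we can distribute some of the processing among the different processing elements if you want to run in parallel.

So we're looking at that very carefully as well, based on customer use cases. That's a quick rundown of the range of hardware support.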

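Wong: Okay. Since we're talking about optimization, can you give us some details on how the optimization is done, and what kind of results you can get?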

Oshana: So the optimizations are done mainly to exploit parallelism where it's possible, on the computational side as well. If you look at something like a multilayer perceptron, there are potentially hundreds of thousands of different computations on the biases and on each of the nodes, so we provide parallelism in order to do those computations. But we are also doing some memory optimizations with our neural-network processor.
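To make the parallelism point concrete, here is a minimal sketch of one multilayer-perceptron layer in which each node's output depends only on the shared inputs and that node's own weights and bias, so the nodes can be computed independently. The function names, the ReLU activation, and the sample values are assumptions for illustration and do not describe NXP's implementation. Python threads are used only to show that the work is independent; they do not model the speedup of dedicated hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def node_output(weights_row, inputs, bias):
    """One node: weighted sum of the inputs plus a bias, then ReLU."""
    total = sum(w * x for w, x in zip(weights_row, inputs)) + bias
    return max(0.0, total)


def layer_sequential(weights, inputs, biases):
    """Compute every node one after another."""
    return [node_output(row, inputs, b) for row, b in zip(weights, biases)]


def layer_parallel(weights, inputs, biases, workers=4):
    """Compute the same nodes as independent tasks in a thread pool."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        tasks = zip(weights, biases)
        # pool.map preserves input order, so results line up with the nodes.
        return list(pool.map(lambda rb: node_output(rb[0], inputs, rb[1]), tasks))


# Hypothetical 3-input, 3-node layer.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 0.25], [1.0, 1.0, 1.0], [-2.0, 0.0, 0.5]]
biases = [0.0, -1.0, 1.0]

print(layer_sequential(weights, inputs, biases))
print(layer_parallel(weights, inputs, biases))
```

Because no node reads another node's result within the layer, both versions return the same values regardless of the order in which the tasks run.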

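There are more ways we can map and allocate memory, and since we use memory in each of the different phases of ML, we can optimize for memory as well.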

And then on the power side, most ASIC types, CPUs, or any custom kind of block provide the opportunity to use different forms of power gating in order to lower overall power consumption (Fig. 3).

We're benchmarking all three of these (performance, memory, and power) to try to get to a kind of sweet spot where we think our customers want to be in terms of the different applications they're working on.

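Going back to the Edge ML picture on the previous slide (see Fig. 2), at the bottom you'll see that the areas we focus on include anomaly detection, voice recognition, face recognition, and multi-object classification.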

What we're doing there is we've optimized a lot of our hardware and software for these types of generic applications, and then we're providing software frameworks that we call software packs. These allow a developer to get started very quickly with the software structures, the ingress and egress, and the processing for each of these key areas.

We've optimized the software to work as closely as possible with the underlying hardware. And that gives us another opportunity to optimize at the software level as well.

Wong: Well, excellent. Thank you very much for providing your insights on machine learning on the edge.

Oshana: Thank you, Bill.