Despite our murky understanding of how humans form concepts, researchers from DeepMind, an artificial intelligence company under Google parent Alphabet, have developed a program that appears to bring human and machine learning closer than ever before. In a major breakthrough, the program defeated a top player of Go, an ancient board game that requires a mix of strategy and instinct that is extremely difficult for computers to reproduce.

In a competition held last fall, the program, known as AlphaGo, defeated European champion Fan Hui in five straight games on a full board. The event marked the first time that a computer had beaten a professional under those circumstances. Other programs had previously defeated professionals on smaller, unofficial boards or with other handicaps. The results were published in the journal Nature last week.

Beating humans at board games has long served as a benchmark for AI research. AlphaGo has widely been compared to IBM’s Deep Blue program, which famously defeated chess champion Garry Kasparov in 1997. Deep Blue used what is known as brute force processing to defeat Kasparov, combing through all the possible moves in the game and all the possible outcomes of those moves.

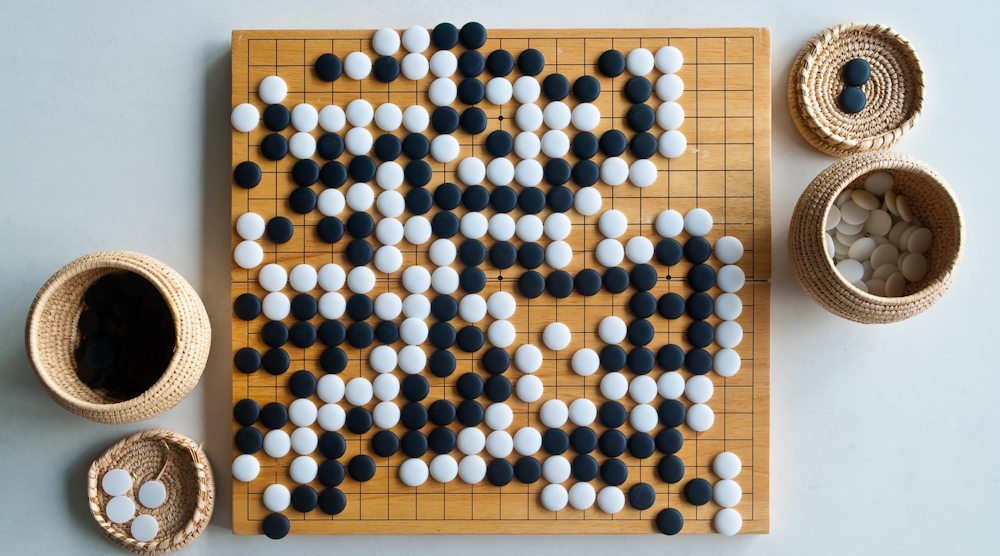

Although brute force processing was an effective approach to chess, it has not worked with Go. The game, which is thought to have originated in China more than 2,500 years ago, involves placing white and black stones on a grid, with each player vying to control more than half the board.

Go is considered one of the hardest tests for AI because there are far more possible moves than in chess. “Another way of viewing the complexity of Go is that the number of possible configurations on the board is more than the number of atoms in the universe,” says Demis Hassabis, chief executive of DeepMind, in a YouTube video.
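The scale behind that comparison can be checked with a rough back-of-the-envelope calculation. The sketch below assumes a standard 19×19 board where each point is empty, black, or white, which gives an upper bound that also counts illegal arrangements, and it uses the commonly cited rough figure of 10^80 atoms in the observable universe. Both figures are assumptions for illustration, not numbers taken from the DeepMind paper.

```python
# Rough upper bound on Go board configurations (an illustration, not an exact count).
BOARD_POINTS = 19 * 19            # a full-size board has 361 intersections
STATES_PER_POINT = 3              # each point is empty, black, or white

# Upper bound: this also counts arrangements that are illegal under Go's rules.
upper_bound = STATES_PER_POINT ** BOARD_POINTS

# Assumption: a commonly cited rough estimate for atoms in the observable universe.
ATOMS_ESTIMATE = 10 ** 80


def order_of_magnitude(n: int) -> int:
    """Return the power of ten of a positive integer (digit count minus one)."""
    return len(str(n)) - 1


print(order_of_magnitude(upper_bound))      # power of ten of the upper bound
print(upper_bound > ATOMS_ESTIMATE)         # does the bound exceed the atom estimate?
```

The number of legal positions is smaller than this bound, but it still dwarfs the atom estimate by many orders of magnitude.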

For that reason, the researchers set out to create a program that could narrow its search area, recognizing patterns and making the kind of intuitive (and almost instinctual) judgments that define how humans approach the game.

Within AlphaGo, the research team combined two different neural networks—programs with millions of interconnected points that attempt to process data like a human brain. These networks employ so-called “deep learning” to help the program form abstract concepts by studying huge amounts of data.

AlphaGo's first neural network analyzed around 30 million Go positions, with humans teaching it which moves to make next. The second program tested what it had learned from watching humans, playing thousands of games against itself. The program taught itself to evaluate board positions and even developed new strategies on its own. While the first part of AlphaGo’s education was supervised by humans, this second part was completely unsupervised.

Unlike brute force programs, this approach to machine learning allowed the research team to restrict the depth of AlphaGo’s tree search. The program only looked about 20 moves ahead, ensuring that the program did not crumble under the weight of so many possibilities.
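The idea of a narrowed, depth-limited search can be sketched on a toy game. The example below is not AlphaGo's method, which combines its neural networks with Monte Carlo tree search; it swaps in a much simpler technique, depth-limited negamax, on a stone-removal game. The functions `toy_policy` and `toy_value` are hypothetical hand-written stand-ins for the two networks: one narrows the candidate moves, the other scores a position when the search stops early.

```python
from typing import List, Optional, Tuple


def legal_moves(stones: int) -> List[int]:
    """Toy game: remove 1-3 stones; whoever takes the last stone wins."""
    return [m for m in (1, 2, 3) if m <= stones]


def toy_policy(stones: int, top_k: int) -> List[int]:
    """Hypothetical stand-in for a policy network: rank moves, keep the best top_k."""
    # Hand-written heuristic: prefer moves that leave a multiple of 4 stones.
    ranked = sorted(legal_moves(stones), key=lambda m: (stones - m) % 4)
    return ranked[:top_k]


def toy_value(stones: int) -> float:
    """Hypothetical stand-in for a value network: score for the player to move."""
    return -1.0 if stones % 4 == 0 else 1.0


def search(stones: int, depth: int, top_k: int = 2) -> Tuple[float, Optional[int]]:
    """Depth-limited negamax over only the policy's top_k candidate moves."""
    if stones == 0:
        return -1.0, None  # the previous player took the last stone, so the mover lost
    if depth == 0:
        return toy_value(stones), None  # stop early and trust the evaluation
    best_score, best_move = -2.0, None
    for move in toy_policy(stones, top_k):
        child_score, _ = search(stones - move, depth - 1, top_k)
        score = -child_score  # a good position for the opponent is bad for us
        if score > best_score:
            best_score, best_move = score, move
    return best_score, best_move


score, move = search(10, depth=2)
print(score, move)
```

Limiting `top_k` narrows the breadth of the search and limiting `depth` narrows how far ahead it looks, so the number of positions examined stays small even when the full game tree is enormous.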

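The researchers compared the new method to the way humans learn from experience and use new knowledge to make creative choices. “The search process itself is not based on brute force,” says David Silver, a researcher at DeepMind. “It’s based on something more akin to imagination.”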

Using this hybrid algorithm, AlphaGo won 99.8% of the games it played against other Go programs. Next came the defeat of Fan Hui, who is ranked 633rd in the world. In a blog post last week, Alphabet said that AlphaGo was next scheduled to play against Lee Sedol, the fifth-ranked player in the world, in March.

Although there has been significant progress in the study of neural networks, AlphaGo’s success was somewhat surprising to other researchers. Remi Coulom, who has spent nearly a decade developing a Go-playing algorithm, said in an interview with Wired magazine last year that he thought it would take another 10 years for researchers to design a program that could defeat professionals.

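Coulom’s prediction came last December, by which time AlphaGo had already won its first match against a professional, but before the results were published. Around the same time, a research team at Facebook announced new advances in its own Go-playing program, and several Silicon Valley companies have begun pouring money into AI research. In November, for example, Toyota established an AI research lab, pledging $1 billion in funding.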

While it might not be the general AI that researchers have chased for decades, AlphaGo’s ability to recognize patterns could have far-reaching applications. DeepMind’s Silver says that the program could be used to scan through healthcare data, make diagnoses, and find new strategies for treatment. In a YouTube video published by Nature, Silver also said that it could be used to enhance Google services, such as a new personal assistant for smartphones and tablets. This contrasts with IBM’s Deep Blue, which was designed specifically for chess.

Neural networks are also being investigated in the study of image recognition and human-computer interactions. John Giannandrea, head of machine learning at Google, who has focused in recent years on self-driving cars, has said that language understanding and summarization is the “holy grail” of AI research.

The Defense Advanced Research Projects Agency (DARPA), for instance, is sponsoring research into computer programs that extract ideas not only from words but also from a person’s tone, facial expression, and gestures. The agency is also relying on games to test computer intelligence. One of the most ambitious goals is collaborative storytelling, in which computers and humans take turns adding to a story.

“This is a parlor game for humans, but a tremendous challenge for computers,” said Dr. Paul Cohen, a DARPA program manager who has written several books on AI. The point of the research is to make computers more like our partners and assistants, he says—not just tools to be prodded by a few clicks.

Doing so requires making computers more sensitive to the complexities of life. For Hassabis, games are the most natural way to do this. “Most games are fun and were designed because they’re a microcosm of some aspect of life,” he says, “and they’re maybe slightly constrained or simplified in some way, but that makes them the perfect challenge as a stepping stone toward building general AI.”
