
What you'll learn:

- How to build an ML accelerator chip using PCI Express.

- What is the training process for a machine-learning model?

- The emergence of complex models that need multiple accelerators.

Machine-learning (ML) solutions, especially those based on deep learning (DL), are penetrating all aspects of our personal and business lives. ML-based solutions can be found in agriculture, media and advertising, healthcare, defense, law, finance, manufacturing, and e-commerce. On a personal level, ML touches our lives when we read Google News, play music from our Spotify playlists, browse our Amazon recommendations, and speak to Alexa or Siri.

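Given the pervasive use of ML technology in business and consumer use cases, it's clear that delivering high performance at a low total cost of operation for ML applications is important to the customers deploying them. As a result, there's a rapidly growing market for chips that process ML workloads efficiently.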

For some markets such as the data center, these chips can be discrete ML accelerator chips. Given the addressable market, it’s no surprise that the market for discrete ML accelerators is highly competitive. In this article, we will outline how PCIe technology can be leveraged by discrete ML accelerator-chip vendors to make their product stand out in such a hyper-competitive market.

Using PCIe to Build ML Accelerator Chips That Meet Customer Needs

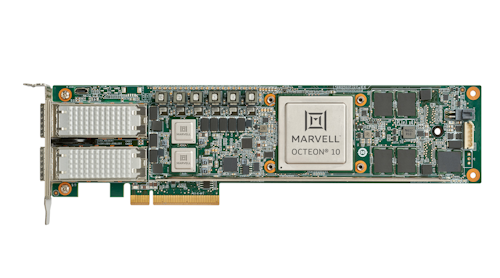

In addition to offering the best performance per watt per dollar for the widest possible set of machine-learning use cases, several capabilities serve as table stakes in the highly competitive ML accelerator market. First, the accelerator solution must be able to attach to as many compute chips as possible from different compute-chip vendors. Choosing a widely adopted chip-to-chip interconnect protocol such as the PCI Express (PCIe) as the accelerator/compute-chip interconnect solution will automatically ensure that the accelerator can attach to almost all available compute chips.

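Next, the accelerator must be easy for standard OSes such as Linux and Windows to discover, program, and manage. Making the accelerator a PCIe device automatically lets standard OSes discover it through the enumeration flow defined for PCIe devices, and configure and manage it using well-known programming models. Vendors typically must supply a device driver for their accelerator product. Driver development for PCIe devices is well understood, and vendors can draw on a large body of open-source code and documentation. This reduces both development cost and time to market for the vendor's product.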
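Once the OS has enumerated the device, its configuration-space identity is visible to user space. The sketch below assumes a Linux host. It walks the standard sysfs directory `/sys/bus/pci/devices` and picks out functions whose base class code is 0x12 ("processing accelerators" in the PCI class-code list). Which class code an accelerator actually reports is the vendor's choice, so treat the filter as an assumption. `find_accelerators` is a hypothetical helper name.

```python
from pathlib import Path

# Base class 0x12 = "processing accelerators" in the PCI class-code list.
# Assumption: the accelerator reports this class; some devices report others.
ACCEL_BASE_CLASS = 0x12


def find_accelerators(sysfs_root="/sys/bus/pci/devices"):
    """Return (address, vendor_id, device_id) tuples for every PCI function
    under sysfs_root whose base class code matches ACCEL_BASE_CLASS."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []  # not a Linux host, or sysfs not mounted
    found = []
    for dev in sorted(root.iterdir()):
        try:
            # The kernel fills these files from each function's config space;
            # 'class' is the 24-bit class code, e.g. "0x120000".
            class_code = int((dev / "class").read_text(), 16)
            vendor = int((dev / "vendor").read_text(), 16)
            device = int((dev / "device").read_text(), 16)
        except (OSError, ValueError):
            continue  # skip entries with missing or unreadable attributes
        if class_code >> 16 == ACCEL_BASE_CLASS:
            found.append((dev.name, vendor, device))
    return found


if __name__ == "__main__":
    for addr, vendor, device in find_accelerators():
        print(f"{addr}: vendor=0x{vendor:04x} device=0x{device:04x}")
```

A driver binds to the same vendor and device IDs that this scan reads, which is why PCIe enumeration makes discovery largely automatic.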

Application software must be able to use the accelerator with minimum software development effort and cost. By turning the accelerator into a PCIe device, well-known robust software methods for accessing and using PCIe devices can be instantly deployed.

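With the proliferation of cloud computing, a large share of ML applications are hosted in the cloud as virtual-machine (VM) instances or containers. Giving these VMs or containers access to ML inference or training accelerators can improve their performance. An ML accelerator is therefore more attractive in the market if it can be virtualized, so that its acceleration capabilities are available to multiple VMs or containers.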

The industry standard for device virtualization, single-root I/O virtualization (SR-IOV), is based on PCIe technology. In addition, direct assignment of PCIe device functions to VMs is widely supported and used because of the high performance it offers. Hence, by implementing PCIe architecture for their accelerator, vendors can address market segments that need high-performance virtualized accelerators.
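On Linux, SR-IOV virtual functions (VFs) are typically created through the physical function's `sriov_totalvfs` and `sriov_numvfs` sysfs attributes. Each resulting VF enumerates as its own PCIe function, which a hypervisor can then assign directly to a VM. The helper below is a minimal sketch. It assumes a physical-function driver with SR-IOV support and root privileges. `enable_vfs` and the example address are hypothetical.

```python
from pathlib import Path


def enable_vfs(bdf, num_vfs, sysfs_root="/sys/bus/pci/devices"):
    """Set the number of SR-IOV VFs on the physical function at `bdf`
    (e.g. "0000:3b:00.0", a hypothetical address). Returns the new count."""
    dev = Path(sysfs_root) / bdf
    total = int((dev / "sriov_totalvfs").read_text())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{bdf} supports 0..{total} VFs, requested {num_vfs}")
    numvfs = dev / "sriov_numvfs"
    current = int(numvfs.read_text())
    if current == num_vfs:
        return current  # already configured; avoid tearing VFs down
    if current != 0:
        # The kernel refuses to change a nonzero VF count directly,
        # so the existing VFs must be disabled first.
        numvfs.write_text("0")
    numvfs.write_text(str(num_vfs))
    return num_vfs
```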

Training

Before machine-learning models can be deployed in production, they must be trained. The training process for ML, especially deep learning, involves feeding in a huge number of training samples to the model that’s being trained.

In most cases, these samples need to be fetched or streamed from storage systems or the network. Therefore, the time to train to an acceptable level of accuracy will be affected by the bandwidth and latency properties of the link between the ML accelerator and storage systems or networking interfaces. The shorter the time to train, the better the accelerator solution will be for customers.

An accelerator can potentially lower the time to train by using PCIe technology’s peer-to-peer traffic capability to stream data directly from the storage device or the network. Using the peer-to-peer capability this way improves performance by avoiding the round trip through the host compute system’s memory for the training samples.

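Moreover, most high-performance storage nodes and network interface cards (NICs) connect to other components in the system over PCIe-based links. As a PCIe-compliant device, the accelerator can therefore exchange peer-to-peer traffic natively with most storage devices and NICs.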

The peer-to-peer capability of PCIe architecture can be useful on the inference and generation side of machine learning as well. For example, in applications like object detection in autonomous driving, a constant stream of camera output must be fed to the inference accelerator with the lowest possible latency. Here, the peer-to-peer capability can be used to stream camera data to the inference accelerator with minimal latency.

High-bandwidth requirements for the connections between the ML accelerator chip and compute chips, storage cards, switches, and NICs necessitate high-data-rate serial transmission. As data rates increase and the distance between the chips expands or stays the same, advanced PCB materials and/or reach extension solutions, such as retimers, are required to stay within the channel insertion loss budget.

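Retimers are fully defined in the PCIe specification and can support a variety of complex board designs and system topologies. Making the accelerator a PCIe device therefore lets accelerator vendors use the PCIe technology ecosystem to achieve the necessary high-data-rate serial transmission across a wide range of customer board designs and system topologies.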

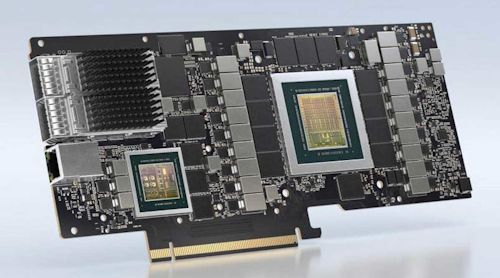

Multiple Accelerators for Complex Models

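The cutting edge of machine-learning fields such as natural-language processing is moving toward extremely large, complex models such as GPT-3, which has 175 billion parameters. The parameter-storage and compute requirements of training these models, or even of using them for prediction or generation (e.g., language translation), can exceed the compute and memory capacity of a single accelerator chip.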

Therefore, a system with multiple accelerators becomes necessary when large models like GPT-3 are the preferred choice for the use case. In such multi-accelerator systems, the interconnect between system components must offer high bandwidth, be scalable, and accommodate heterogeneous nodes attached to the interconnect fabric.
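A quick back-of-the-envelope calculation shows why a model the size of GPT-3 spills across chips. The sketch makes two assumptions. Inference needs 2 bytes per parameter to hold FP16 weights. Mixed-precision training with the Adam optimizer needs about 16 bytes per parameter: FP16 weights and gradients, plus FP32 master weights and two FP32 moment estimates. The 80-GB accelerator memory is a hypothetical figure. Activations and workspace are ignored, so the results are lower bounds.

```python
GPT3_PARAMS = 175_000_000_000
DEVICE_MEM_BYTES = 80 * 10**9  # hypothetical 80-GB accelerator

# Bytes of model state per parameter under two common assumptions.
BYTES_PER_PARAM = {
    "inference, FP16 weights": 2,
    # 2 (FP16 weights) + 2 (FP16 grads) + 4 (FP32 master) + 4 + 4 (Adam moments)
    "training, mixed-precision Adam": 16,
}


def min_accelerators(num_params, bytes_per_param, device_mem_bytes):
    """Lower bound on chips needed just to store model state (integer ceiling)."""
    state_bytes = num_params * bytes_per_param
    return -(-state_bytes // device_mem_bytes)


if __name__ == "__main__":
    for label, bpp in BYTES_PER_PARAM.items():
        chips = min_accelerators(GPT3_PARAMS, bpp, DEVICE_MEM_BYTES)
        print(f"{label}: {GPT3_PARAMS * bpp / 1e9:,.0f} GB -> at least {chips} chips")
```

Under these assumptions, inference needs at least 5 such chips (350 GB of weights), and training needs at least 35 (2.8 TB of model state). The chip-to-chip interconnect must then carry the traffic between all of them.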

PCIe technology is a great option for system component interconnect due to its high bandwidth, and its ability to scale by deploying switches. As mentioned previously, the ubiquity of PCIe-based devices allows the same fabric to have NICs, storage devices, and the accelerators. This allows efficient peer-to-peer communication leading to lower time to train, lower inference latency, or higher inference throughput. For multi-accelerator use cases where a low-latency and high-bandwidth inter-accelerator interconnect is desired, the accelerator vendor can take advantage of the PCIe specification’s alternate protocol support to create a custom inter-accelerator interconnect.

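Another important aspect of designing an accelerator to train large models like GPT-3, or to run inference with them, is the large amount of power that must be delivered to the accelerator to process these models at maximum performance. The PCIe specification provides a standardized way for systems to deliver large amounts of power to accelerator cards. By using PCIe technology, accelerator vendors can confidently design a card that draws the maximum power the PCIe architecture allows, without worrying about interoperability with systems from different system vendors.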

Power Efficiency

The performance per dollar of an ML solution depends in part on its power efficiency. For example, an inference accelerator might use its link to the compute SoCs only when a new inference request is passed from the compute SoC to the accelerator. For the rest of the time, the link is essentially idle. Unless the link has low-power idle states, it will consume power unnecessarily by remaining in a high-performance active state.

For maximum efficiency, it's important that the power consumption of the accelerator's links to the rest of the system scale linearly with the utilization of those links. PCIe offers link power states such as L1 and L0p that modulate a link's power consumption based on idleness and bandwidth usage.
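To make "scales with utilization" concrete, the toy policy below picks a link width from the offered load. It chooses the narrowest power-of-two width that still carries the traffic while the link stays active (L0/L0p), or zero lanes when the link is idle, which makes the link a candidate for L1. This is a hypothetical illustration, not the specification's algorithm. Real width changes and L1 entry are negotiated by the link hardware and the platform's power-management policy. The sketch also assumes that link power roughly tracks the number of active lanes.

```python
def target_lanes(utilization, max_width=16):
    """Hypothetical policy: return the number of lanes to keep active for a
    load expressed as a fraction of full-width bandwidth.
    0 means the link is idle and could enter L1."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be in [0, 1]")
    if utilization == 0.0:
        return 0
    needed = utilization * max_width  # lanes' worth of bandwidth required
    width = 1
    while width < needed:
        width *= 2  # link widths are powers of two
    return min(width, max_width)


for load in (0.0, 0.05, 0.25, 0.3, 1.0):
    print(load, target_lanes(load))
```

In the inference example above, the link spends most of its time at zero load. Under this policy it would sit at zero lanes (L1) between requests and widen only while a request is in flight.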

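In addition, the PCIe specification defines device power states (D-states) that standard OSes can use to reduce system power by putting the accelerator to sleep when it isn't needed. PCIe technology also provides the ability to control the accelerator's active power consumption. The PCIe specification therefore enables the accelerator to contribute positively to the system's overall power efficiency.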
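Linux, for example, exposes OS control of device power states through its runtime power-management interface in sysfs. Writing `auto` to a PCI device's `power/control` attribute lets the PCI core suspend the device (typically into D3hot) when its driver reports it idle. Writing `on` keeps it in D0. The sketch assumes the accelerator's driver implements runtime PM. `set_runtime_pm` and `runtime_status` are hypothetical helper names.

```python
from pathlib import Path


def set_runtime_pm(bdf, allow_suspend=True, sysfs_root="/sys/bus/pci/devices"):
    """Enable ('auto') or disable ('on') runtime power management for the
    PCI function at `bdf`. Returns the previous setting so it can be restored."""
    control = Path(sysfs_root) / bdf / "power" / "control"
    previous = control.read_text().strip()
    control.write_text("auto" if allow_suspend else "on")
    return previous


def runtime_status(bdf, sysfs_root="/sys/bus/pci/devices"):
    """Read the device's current runtime-PM state, e.g. 'active' or 'suspended'."""
    return (Path(sysfs_root) / bdf / "power" / "runtime_status").read_text().strip()
```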

PCIe and RAS

In AI data-center deployments, reliability, availability, and serviceability (RAS) features are necessary in all system components, including accelerators. In addition, to be usable in practice, such RAS features must comply with what standard OSes and platform firmware expect. The PCIe architecture offers a rich suite of OS-friendly RAS features, including advanced error reporting (AER) and hot add and removal of PCIe devices. Choosing PCIe-based links to connect to other system components therefore helps the accelerator product meet the data-center market's RAS needs.
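Standard tooling can see advanced error reporting results as well. Recent Linux kernels, for instance, publish per-device AER counters in sysfs files such as `aer_dev_correctable`, with one `name count` pair per line. The parser below is a sketch that assumes that format. `read_aer_counters` and `has_uncorrectable_errors` are hypothetical helpers that a fleet-monitoring agent might poll.

```python
from pathlib import Path


def read_aer_counters(bdf, sysfs_root="/sys/bus/pci/devices"):
    """Return {'correctable': {...}, 'nonfatal': {...}, 'fatal': {...}} for the
    function at `bdf`, skipping any counter file that is not present."""
    dev = Path(sysfs_root) / bdf
    counters = {}
    for kind in ("correctable", "nonfatal", "fatal"):
        path = dev / f"aer_dev_{kind}"
        if not path.exists():
            continue  # no AER capability, or kernel too old to expose counters
        stats = {}
        for line in path.read_text().splitlines():
            name, _, value = line.rpartition(" ")
            if name:  # ignore blank or malformed lines
                stats[name] = int(value)
        counters[kind] = stats
    return counters


def has_uncorrectable_errors(counters):
    """True if any nonfatal or fatal error has been logged."""
    return any(
        count > 0
        for kind in ("nonfatal", "fatal")
        for count in counters.get(kind, {}).values()
    )
```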

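One important advantage the PCIe specification offers AI accelerator vendors is the ability to retarget the same solution to several different market segments. Two features of PCIe technology make this possible: the availability of different form factors, and the ability of PCIe interfaces to operate at different link widths. Together they let vendors scale interface bandwidth, power consumption, and form factor in proportion to the acceleration capability each market segment requires.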
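The bandwidth side of that scaling is simple arithmetic. Per-direction bandwidth is roughly the per-lane transfer rate times the encoding efficiency times the lane count, divided by 8 bits per byte. The sketch below computes it for the common link widths. It ignores packet (TLP/DLLP) overhead. For PCIe 6.0 it also ignores FLIT-mode FEC/CRC overhead, so those figures are raw upper bounds. `link_bandwidth_gbps` is a hypothetical name.

```python
# Per-lane signaling rate (GT/s) and line-encoding efficiency by generation.
# Gen 6 uses PAM4 with FLIT mode; its FLIT/FEC/CRC overhead is NOT modeled,
# so the Gen 6 figure is a raw upper bound.
PCIE_GENS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
    6: (64.0, 1.0),
}
VALID_WIDTHS = (1, 2, 4, 8, 16)  # common link widths


def link_bandwidth_gbps(gen, width):
    """Approximate one-direction bandwidth in GB/s, before packet overhead."""
    if width not in VALID_WIDTHS:
        raise ValueError(f"unsupported link width x{width}")
    rate_gt, efficiency = PCIE_GENS[gen]
    return rate_gt * efficiency * width / 8  # 8 bits per byte


if __name__ == "__main__":
    for w in VALID_WIDTHS:
        print(f"PCIe 5.0 x{w}: {link_bandwidth_gbps(5, w):.1f} GB/s")
```

A vendor could, for example, pair a x4 card for edge inference with a x16 card for data-center training while reusing the same interface design.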

Confidentiality and integrity of the data transferred to and from the accelerator are important to most customers. The PCIe specification has recently introduced Integrity and Data Encryption (IDE) for data transfers over PCIe links. An accelerator vendor can leverage PCIe IDE to provide end-to-end confidentiality and integrity for data.

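Both training and inference workflows can involve the CPU cores in the compute SoC and the accelerator executing the ML application cooperatively. This requires a high-bandwidth, low-latency communication channel between the compute SoC and the accelerator, and PCIe-based links are a natural fit. Even lower latency and higher bandwidth efficiency may be achievable through the PCIe specification's alternate protocol support for custom chip-to-chip communication protocols.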

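Given how competitive the ML accelerator market is, minimizing time to market is important for accelerator vendors. PCIe technology helps here in several ways. High-quality PCIe IP is available for rapid chip design and verification. Vendors have easy access to compliance-testing services to ensure their chips work with all PCIe-compliant compute systems. They can also draw on a large pool of PCIe architecture experts.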

Conclusion

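As discussed, machine-learning (especially deep-learning) models are growing in size and complexity. To keep pace with this trend in compute and memory capacity, systems with multiple interconnected accelerator chips will become increasingly necessary. To realize the full performance potential of such systems, chip-to-chip interconnect performance must scale along with compute and memory capacity.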

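Choosing PCIe technology for the chip-to-chip interconnect lets vendors benefit from the bandwidth increase that each new PCIe generation brings to market. For example, PCIe 6.0 is projected to deliver a data rate of 64 GT/s, double the PCIe 5.0 data rate.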
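For a x16 link, the generational doubling works out as follows. Only line encoding is counted: 128b/130b for PCIe 5.0, while the PCIe 6.0 figure is the raw rate before FLIT-mode overhead.

$$
\mathrm{BW}_{5.0,\,\times 16} = \frac{32\ \mathrm{GT/s} \times 16 \times \frac{128}{130}}{8\ \mathrm{bits/byte}} \approx 63\ \mathrm{GB/s\ per\ direction}
$$

$$
\mathrm{BW}_{6.0,\,\times 16} = \frac{64\ \mathrm{GT/s} \times 16}{8\ \mathrm{bits/byte}} = 128\ \mathrm{GB/s\ per\ direction\ (raw)}
$$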

In summary, adopting the PCIe specification will enable ML accelerator vendors to:

- Develop market-leading accelerators with lower risk and faster time to market.

- Have a robust pathway to keep up with workload demands for scaling of chip-to-chip interconnect bandwidth.
